Do you know what you should NEVER, and I mean NEVER, say to open-source

project authors? “I don't have time.” These four words can destroy a

developer’s motivation faster than an iPhone battery drains while scrolling

TikTok.

- “I don't have time to write a fix.”

- “I don't have time to create a bug report.”

- “This should be in the documentation, but I don’t have time to

write it.”

Really? REALLY?!

Imagine you're at a party, and someone says to you: “Hey, you with the

beer! Make me a sandwich. I don’t have time to make it myself, I’m too busy

eating chips.” How would you feel? Like a vending machine with a face?

That’s exactly how I feel when I read words like that. My motivation to

help vanishes instantly, and I feel the urge to do anything else — even

absolutely nothing.

You see, we open source developers are a peculiar breed. We spend hours of

our free time creating software that we then make available to everyone. For

free. Voluntarily. It’s like Santa Claus handing out gifts every day of the

year, not just on Christmas. We enjoy it. But that doesn’t give anyone the

right to boss us around like we’re some kind of digital slaves. So, when

someone comes with a request for a new feature but “doesn’t have time” to

contribute, it immediately raises the question, “Why should I have the time

then?” It’s like asking Michelangelo to paint your living room because you

“don’t have time” to do it yourself — as if he has nothing better

to do.

Over the years, I’ve accumulated dozens of issues across various projects

where I’ve asked, “Could you prepare a pull request?” and the reply was,

“I could, but I don’t have time this week.” If that poor soul hadn’t

written that sentence, I probably would’ve solved the issue long ago. But by

saying that, they basically told me they don’t value my time. So, did they fix

it themselves a week later? Not at all… 99% of the things people

promised to do were never delivered, which is why 99% of those issues remain

unresolved. They hang there like digital monuments to human laziness.

So, dear users, before you write “I don’t have time,” think again.

What you’re really saying is, “Hey, you! Your free time is worthless. Drop

everything you’re doing and deal with MY problem!” Instead, try this:

- Find the time. Trust me, it’s there. It might be hiding between episodes

of your favorite show or in the time you spend scrolling through

social media.

- Offer a solution. You don’t need to submit a full patch. Just show that

you’ve given it some real thought.

- Motivate open source maintainers to take up your issue. For example, by

showing how the change will be useful not just for you, but for the whole of

humanity and the surrounding universe.

Next time you find a bug, request a new feature, or notice something missing

from the documentation, try to help out the community in some way. Because in

the open-source world, we’re all in the same boat. And to keep it moving

forward, we all need to row. So don’t just sit there complaining that you

“don’t have time” to paddle — grab an oar and do your part. Saying

“I don’t have time” is the fastest way to kill the motivation of those

who are giving you free software. Try to carve out those few minutes or hours.

Your karma will thank you.

And conversely, was SQL the GPT of the seventies?

SQL, which emerged in the 1970s, represented a revolutionary breakthrough in

human-computer interaction. Its design aimed to make queries as readable and

writable as possible, resembling plain English. For instance, a query to fetch

names and salaries of employees in SQL might look like this:

SELECT name, salary FROM employee – simple and comprehensible,

right? This made databases accessible to a broader audience, not just

computer nerds.

Although this intention was commendable, it soon became clear that writing

SQL queries still required experts. Moreover, because a universal application

interface never emerged, using natural language for modern programming became

more of a burden. Programmers today communicate with databases by writing

complex SQL command generators, which databases then decode.

Enter GPT. These advanced language models bring a similar revolution in the

era of artificial intelligence as SQL did for databases in its time. They enable

interaction with databases on an entirely new level, 50 years on.

Although there is currently no database directly controlled by a language model,

we can use GPT to generate SQL. And it works great.

How to do it? Just copy the structure of your database, i.e., a dump with

CREATE TABLE commands, into ChatGPT and include the name of the

database server you are using. You can then ask questions directly

in Czech.

If you want to make using this technique even easier, use the prepared SQL Wizard, where you

just need to copy the database structure and you can start asking questions

right away without further explanation.
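The prompt described above can also be assembled in code. The following is only a sketch: the helper name buildSqlPrompt() and the wording of the prompt are assumptions made for illustration, not part of SQL Wizard or of any library.

```php
<?php
declare(strict_types=1);

/**
 * Builds a plain-text prompt for a language model (hypothetical helper).
 * It combines the server name, the CREATE TABLE dump and the question.
 */
function buildSqlPrompt(string $schema, string $server, string $question): string
{
    return "I use the database server $server.\n"
        . "This is the structure of my database:\n\n"
        . trim($schema) . "\n\n"
        . "Write SQL for the following request: $question";
}

// A tiny illustrative schema; in practice, paste your real dump here.
$schema = 'CREATE TABLE users (id INT PRIMARY KEY, name VARCHAR(100));';
$prompt = buildSqlPrompt($schema, 'MySQL 8', 'List all users.');

echo $prompt, "\n";
```

The resulting text can be pasted into ChatGPT as is. Naming the database server matters because SQL dialects differ.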

Let's see a practical example. We will use two database tables linked

through a foreign key. The users table will contain information

about users, and the orders table will store information about

orders made by users. Here are the definitions of these tables:

CREATE TABLE users (

id INT PRIMARY KEY,

name VARCHAR(100),

email VARCHAR(100)

);

CREATE TABLE orders (

order_id INT PRIMARY KEY,

user_id INT,

created_at DATE,

amount DECIMAL(10, 2),

FOREIGN KEY (user_id) REFERENCES users(id)

);

Copy these definitions into ChatGPT and you can immediately ask naturally and

in Czech, for example, about all orders from user Jan Novák. Specifically,

I would ask: “Write SQL that lists all orders from user Jan Novák.” And

GPT might respond like this:

SELECT orders.order_id, orders.created_at, orders.amount

FROM orders

JOIN users ON orders.user_id = users.id

WHERE users.name = 'Jan Novák';

Many programmers can, of course, write such an SQL query faster than logging

into ChatGPT 🙂 But let's try a slightly more complex example: “List users

who have only one order.”

SELECT users.id, users.name, users.email

FROM users

JOIN orders ON users.id = orders.user_id

GROUP BY users.id, users.name, users.email

HAVING COUNT(orders.order_id) = 1;

Or “List users who have no orders.”

SELECT users.*

FROM users

LEFT JOIN orders ON users.id = orders.user_id

WHERE orders.order_id IS NULL;

Here the fundamental difference in approach is evident. While with GPT,

I specify what data I want to get, in SQL, I have to describe how to get it.

And that requires knowledge and experience.

Thanks to GPT, you can focus on what you want to get, instead of how to get

it, which is especially valuable for those who are not SQL experts.

Finally, let's demonstrate how to use GPT to create database triggers. For

example, you can write: “Add a column order_count to the

users table that will track the number of orders. Initialize it to

the value corresponding to the current number of orders for each user. And

create a trigger that will update the value when an order is created or

deleted.”

GPT will generate queries for adding and initializing the

order_count column:

ALTER TABLE users ADD COLUMN order_count INT DEFAULT 0;

UPDATE users SET order_count = (SELECT COUNT(*) FROM orders WHERE orders.user_id = users.id);

and the required triggers to keep its value up to date:

CREATE TRIGGER update_order_count_after_insert

AFTER INSERT ON orders

FOR EACH ROW

BEGIN

UPDATE users

SET order_count = order_count + 1

WHERE id = NEW.user_id;

END;

CREATE TRIGGER update_order_count_after_delete

AFTER DELETE ON orders

FOR EACH ROW

BEGIN

UPDATE users

SET order_count = order_count - 1

WHERE id = OLD.user_id;

END;

GPT offers a way to work effectively and intuitively with databases, even for

those who are not SQL experts. It's a revolutionary tool that truly makes

advanced database operations accessible to the general public. However, it is

still crucial to carefully check each output to ensure data correctness and

security.
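One way to check generated queries is to run them against a small throwaway database with known data. The sketch below assumes the pdo_sqlite extension is available and uses an in-memory SQLite database instead of your real server. The sample rows are invented for the test, and dialect differences mean a passing check here is a hint, not a proof.

```php
<?php
declare(strict_types=1);

$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

// The same structure as in the article
$pdo->exec('CREATE TABLE users (id INT PRIMARY KEY, name VARCHAR(100), email VARCHAR(100))');
$pdo->exec('CREATE TABLE orders (order_id INT PRIMARY KEY, user_id INT, created_at DATE,
    amount DECIMAL(10, 2), FOREIGN KEY (user_id) REFERENCES users(id))');

// Invented sample data: Jan has two orders, Eva one, Petr none
$pdo->exec("INSERT INTO users VALUES (1, 'Jan Novák', 'jan@example.com'),
    (2, 'Eva Malá', 'eva@example.com'), (3, 'Petr Dlouhý', 'petr@example.com')");
$pdo->exec("INSERT INTO orders VALUES (10, 1, '2024-01-05', 100.00),
    (11, 1, '2024-02-01', 50.00), (12, 2, '2024-03-10', 75.50)");

// Generated query: users who have only one order
$oneOrder = $pdo->query(
    'SELECT users.id, users.name, users.email FROM users
     JOIN orders ON users.id = orders.user_id
     GROUP BY users.id, users.name, users.email
     HAVING COUNT(orders.order_id) = 1'
)->fetchAll(PDO::FETCH_COLUMN, 1); // column 1 is the name

// Generated query: users who have no orders
$noOrders = $pdo->query(
    'SELECT users.* FROM users
     LEFT JOIN orders ON users.id = orders.user_id
     WHERE orders.order_id IS NULL'
)->fetchAll(PDO::FETCH_COLUMN, 1);

var_dump($oneOrder === ['Eva Malá'], $noOrders === ['Petr Dlouhý']);
```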

Software development often presents dilemmas, such as how to

handle situations when a getter has nothing to return. In this article, we'll

explore three strategies for implementing getters in PHP, which affect the

structure and readability of code, each with its own specific advantages and

disadvantages. Let's take a closer look.

Universal Getter with a Parameter

The first solution, used in Nette, is to create a single getter method that

can either return null or throw an exception if the value is not

available, depending on a boolean parameter. Here is an example of what the

method might look like:

public function getFoo(bool $need = true): ?Foo

{

if (!$this->foo && $need) {

throw new Exception("Foo not available");

}

return $this->foo;

}

The main advantage of this approach is that it eliminates the need to have

several versions of the getter for different use cases. A former disadvantage

was the poor readability of user code using boolean parameters, but this has

been resolved with the introduction of named parameters, allowing you to write

getFoo(need: false).

However, this approach may cause complications in static analysis, as the

signature implies that getFoo() can return null under

any circumstances. Tools like PHPStan allow explicit documentation of method

behavior through special annotations, improving code understanding and its

correct analysis:

/** @return ($need is true ? Foo : ?Foo) */

public function getFoo(bool $need = true): ?Foo

{

}

This annotation clearly defines what return types the method

getFoo() can generate depending on the value of the parameter

$need. However, for instance, PhpStorm does not understand it.

Pair of Methods: hasFoo() and getFoo()

Another option is to divide the responsibility into two methods:

hasFoo() to verify the existence of the value and

getFoo() to retrieve it. This approach enhances code clarity and is

intuitively understandable.

public function hasFoo(): bool

{

return (bool) $this->foo;

}

public function getFoo(): Foo

{

return $this->foo ?? throw new Exception("Foo not available");

}

The main problem is redundancy, especially in cases where the availability

check itself is a complex process. If hasFoo() performs complex

operations to verify if the value is available, and then this value is retrieved

again using getFoo(), these operations are repeated.

Hypothetically, the state of the object or data might change between the calls

to hasFoo() and getFoo(), leading to inconsistencies.

From a user's perspective, this approach may be less convenient as it forces

calling a pair of methods with repeating parameters. It also prevents the use of

the null-coalescing operator.

The advantage is that some static analysis tools allow defining a rule that

after a successful call to hasFoo(), no exception will be thrown in

getFoo().

Methods getFoo() and getFooOrNull()

The third strategy is to split the functionality into two methods:

getFoo() to throw an exception if the value does not exist, and

getFooOrNull() to return null. This approach minimizes

redundancy and simplifies logic.

public function getFoo(): Foo

{

return $this->getFooOrNull() ?? throw new Exception("Foo not available");

}

public function getFooOrNull(): ?Foo

{

return $this->foo;

}

An alternative could be a pair getFoo() and

getFooIfExists(), but in this case, it might not be entirely

intuitive to understand which method throws an exception and which returns

null. A slightly more concise pair would be

getFooOrThrow() and getFoo(). Another possibility is

getFoo() and tryGetFoo().

Each of these approaches to implementing getters in PHP has its place

depending on the specific needs of the project and the preferences of the

development team. When choosing a suitable strategy, it's important to consider

the impact on readability, maintenance, and performance of the application. The

choice should reflect an effort to make the code as understandable and efficient

as possible.
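To compare how the three strategies feel at the call site, here is a minimal sketch that puts them side by side. The classes Foo and Container and the method name getFooFlag() are made up for this comparison (the flag variant is renamed only so that all variants fit into one class). It requires PHP 8.0 or newer.

```php
<?php
declare(strict_types=1);

final class Foo
{
    public function __construct(public string $label) {}
}

final class Container
{
    public function __construct(private ?Foo $foo = null) {}

    // Strategy 1: a single getter, behavior switched by a parameter
    public function getFooFlag(bool $need = true): ?Foo
    {
        if ($this->foo === null && $need) {
            throw new RuntimeException('Foo not available');
        }
        return $this->foo;
    }

    // Strategy 2: existence check paired with a throwing getter
    public function hasFoo(): bool
    {
        return $this->foo !== null;
    }

    // Used by strategies 2 and 3: throws when there is nothing to return
    public function getFoo(): Foo
    {
        return $this->getFooOrNull() ?? throw new RuntimeException('Foo not available');
    }

    // Strategy 3: the nullable twin of getFoo()
    public function getFooOrNull(): ?Foo
    {
        return $this->foo;
    }
}

$empty = new Container;
$fallback = new Foo('fallback');

$a = $empty->getFooFlag(need: false) ?? $fallback;      // strategy 1
$b = $empty->hasFoo() ? $empty->getFoo() : $fallback;  // strategy 2
$c = $empty->getFooOrNull() ?? $fallback;               // strategy 3

echo $a->label, ' ', $b->label, ' ', $c->label, "\n";
```

Strategy 2 is the only one that needs a ternary and two calls. The other two combine naturally with the ?? operator.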

Let's once and for all crack the eternal question that

divides the programming community: can HTML be parsed with regular expressions?

I decided to dive into the dark waters of regular expressions to bring an answer (spoiler: yes, it's possible).

So, what exactly does an HTML document contain? It's a mix of text,

entities, tags, comments, and the special doctype tag. Let's first explore each

ingredient separately.

Entities

The foundation of an HTML page is text, which consists of ordinary characters

and special sequences called HTML entities. These can be either named, like

&nbsp; for a non-breaking space, or numerical, either in

decimal &#160; or hexadecimal &#xA0; format.

A regular expression capturing an HTML entity would look like this:

(?<entity>

&

(

[a-z][a-z0-9]+ # named entity

|

\#\d+ # decimal number

|

\#x[0-9a-f]+ # hexadecimal number

)

;

)

All regular expressions are written in extended mode, ignore case, and a

dot represents any character. That is, they use the modifiers six (s, i, and x).
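To try the entity pattern in PHP, it can be passed to the preg functions with the modifiers s, i, and x. This is a minimal sketch. The delimiter ~ and the sample text are my choices, not part of the original expression.

```php
<?php
declare(strict_types=1);

// Nowdoc keeps the backslashes and the # comments exactly as written.
$entity = <<<'REGEX'
~
(?<entity>
    &
    (
        [a-z][a-z0-9]+     # named entity
        |
        \#\d+              # decimal number
        |
        \#x[0-9a-f]+       # hexadecimal number
    )
    ;
)
~six
REGEX;

$text = 'Tom &amp; Jerry&nbsp;&#160;&#xA0; &bogus';
preg_match_all($entity, $text, $m);

// The unterminated "&bogus" is not an entity and is skipped.
print_r($m['entity']); // &amp;, &nbsp;, &#160;, &#xA0;
```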

Tags

These iconic elements make HTML what it is. A tag starts with

<, followed by the tag name, possibly a set of attributes, and

closes with > or />. Attributes can optionally

have a value, which can be enclosed in double, single, or no quotes. A regular

expression capturing an attribute would look like this:

(?<attribute>

\s+ # at least one white space before the attribute

[^\s"'<>=`/]+ # attribute name

(

\s* = \s* # equals sign before the value

(

" # value enclosed in double quotes

(

[^"] # any character except double quote

|

(?&entity) # or HTML entity

)*

"

|

' # value enclosed in single quotes

(

[^'] # any character except single quote

|

(?&entity) # or HTML entity

)*

'

|

[^\s"'<>=`]+ # value without quotes

)

)? # value is optional

)

Notice that I am referring to the previously defined

entity group.

Elements

An element can represent either a standalone tag (so-called void element) or

paired tags. There is a fixed list of void element names by which they are

recognized. A regular expression for capturing them would look like this:

(?<void_element>

< # start of the tag

( # element name

img|hr|br|input|meta|area|embed|keygen|source|base|col

|link|param|basefont|frame|isindex|wbr|command|track

)

(?&attribute)* # optional attributes

\s*

/? # optional /

> # end of the tag

)

Other tags are thus paired and captured by this regular expression (I use a

reference to the content group, which we will define later):

(?<element>

< # starting tag

(?<element_name>

[a-z][^\s/>]* # element name

)

(?&attribute)* # optional attributes

\s*

> # end of the starting tag

(?&content)*

</ # ending tag

(?P=element_name) # repeat element name

\s*

> # end of the ending tag

)

A special case is elements like <script>, whose content

must be processed differently from other elements:

(?<special_element>

< # starting tag

(?<special_element_name>

script|style|textarea|title # element name

)

(?&attribute)* # optional attributes

\s*

> # end of the starting tag

(?> # atomic group

.*? # smallest possible number of any characters

</ # ending tag

(?P=special_element_name)

)

\s*

> # end of the ending tag

)

The lazy quantifier .*? ensures that the expression stops at the

first ending sequence, and the atomic group ensures that this stop is

definitive.

Comments

A typical HTML comment starts with the sequence <!-- and

ends with -->. A regular expression for HTML comments might

look like this:

(?<comment>

<!--

(?> # atomic group

.*? # smallest possible number of any characters

-->

)

)

The lazy quantifier .*? again ensures that the expression stops

at the first ending sequence, and the atomic group ensures that this stop is

definitive.
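The effect of the lazy quantifier is easy to observe in PHP. This sketch writes the comment pattern on a single line (whitespace is ignored in extended mode) and runs it over a made-up input containing two comments, one of them spanning two lines.

```php
<?php
declare(strict_types=1);

// The same pattern as above; spaces are ignored thanks to the x modifier.
$comment = '~(?<comment> <!-- (?> .*? --> ) )~six';

$html = "a<!-- one -->b<!-- two\nlines -->c";
preg_match_all($comment, $html, $m);

// Each comment ends at its own first "-->", so two matches are found
// instead of one match spanning from the first "<!--" to the last "-->".
var_dump($m['comment']);
```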

Doctype

This is a historical relic that exists today only to switch the browser to

so-called standard mode. It usually looks like

<!doctype html>, but can contain other characters as well.

Here is the regular expression that captures it:

(?<doctype>

<!doctype

\s

[^>]* # any character except '>'

>

)

Putting It All Together

With the regular expressions ready for each part of HTML, it's time to

create an expression for the entire HTML 5 document:

\s*

(?&doctype)? # optional doctype

(?<content>

(?&void_element) # void element

|

(?&special_element) # special element

|

(?&element) # paired element

|

(?&comment) # comment

|

(?&entity) # entity

|

[^<] # character

)*

We can combine all the parts into one complex regular expression. This is

it, a superhero among regular expressions with the ability to parse

HTML 5.

Final Notes

Even though we have shown that HTML 5 can be parsed using regular

expressions, the provided example is not useful for processing an HTML

document. It will fail on invalid documents. It will be slow. And so on. In

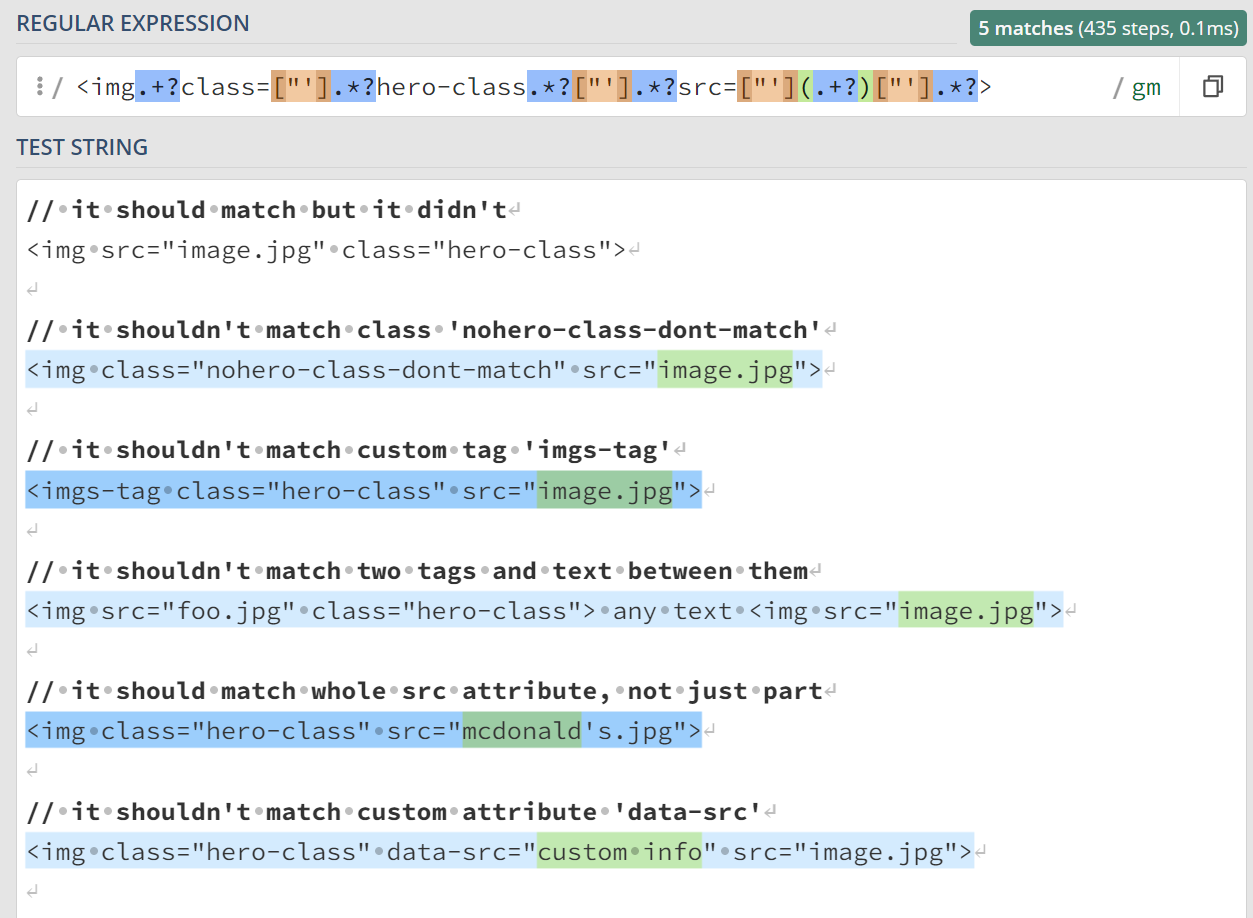

practice, regular expressions like the following are more commonly used (for

finding URLs of images):

<img.+?src=["'](.+?)["'].*?>

But this is a very unreliable solution that can lead to errors. This regexp

incorrectly matches custom tags

such as <imgs-tag src="image.jpg">, custom attributes like

<img data-src="custom info">, or fails when the attribute

contains a quote <img src="mcdonald's.jpg">. Therefore, it is

recommended to use specialized libraries. In the world of PHP, we're unlucky

because the DOM extension supports only the ancient, decaying HTML 4. Fortunately, PHP 8.4 promises an HTML 5 parser.
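The three failure cases can be reproduced in a few lines. The sketch below first runs the simple expression and then, for comparison, extracts the same information with the classic DOMDocument class. The function name extractImageSources() is made up for this example, and the old parser is used only because it is available in every PHP version with the DOM extension.

```php
<?php
declare(strict_types=1);

$pattern = '~<img.+?src=["\'](.+?)["\'].*?>~';

$samples = [
    '<imgs-tag src="image.jpg">',    // custom tag
    '<img data-src="custom info">',  // custom attribute
    '<img src="mcdonald\'s.jpg">',   // quote inside the value
];

$regexResults = [];
foreach ($samples as $html) {
    preg_match($pattern, $html, $m);
    $regexResults[] = $m[1] ?? null;
}
// The regexp reports: image.jpg, custom info, mcdonald

/** Returns src values of real <img> elements (hypothetical helper). */
function extractImageSources(string $html): array
{
    $previous = libxml_use_internal_errors(true); // silence warnings about unknown tags
    $dom = new DOMDocument;
    $dom->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $sources = [];
    foreach ($dom->getElementsByTagName('img') as $img) {
        if ($img->hasAttribute('src')) {
            $sources[] = $img->getAttribute('src');
        }
    }
    return $sources;
}

$domResults = extractImageSources(implode("\n", $samples));
// The parser reports only: mcdonald's.jpg

print_r($regexResults);
print_r($domResults);
```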

A video from Microsoft, intended to be a dazzling

demonstration of Copilot's capabilities, is instead a tragically comedic

presentation of the decline in programming craftsmanship.

I'm referring to this video.

It's supposed to showcase the abilities of GitHub Copilot, including how to use

it to write a regular expression for searching <img> tags

with the hero-image class. However, the original code being

modified is as holey as Swiss cheese, something I would be embarrassed to use.

Copilot gets carried away and instead of correcting, continues in the

same vein.

The result is a regular expression that unintentionally matches other

classes, tags, attributes, and so on. Worse still, it fails if the

src attribute is listed before class.

I write about this because this demonstration of shoddy work, especially

considering the official nature of the video, is startling. How is it possible

that none of the presenters or their colleagues noticed this? Or did they notice

and decide it didn't matter? That would be even more disheartening. Teaching

programming requires precision and thoroughness, without which incorrect

practices can easily be propagated. The video was meant to celebrate the art of

programming, but I see in it a bleak example of how the level of programming

craftsmanship is falling into the abyss of carelessness.

Just to give a bit of a positive spin: the video does a good job of showing

how Copilot and GPT work, so you should definitely give it a look 🙂

Are you looking to dive into the world of Object-Oriented

Programming in PHP but don't know where to start? I have for you a new

concise guide to OOP that will introduce you to all the concepts like

class, extends, private, etc.

In this guide, you will learn about:

- class and object

- namespaces

- inheritance versus composition

- visibility

- the final keyword

- static properties, methods, and constants

- interfaces or abstract classes

- type checking

- Fluent Interfaces

- traits

- and how exceptions work

This guide is not intended to make you a master of writing clean code or to

provide exhaustive information. Its goal is to quickly familiarize you with the

basic concepts of OOP in current PHP and to give you factually correct

information. Thus, it provides a solid foundation on which you can further

build, such as applications in Nette.

As further reading, I recommend the detailed guide to

proper code design. It is beneficial even for those who are proficient in

PHP and object-oriented programming.

Programming in PHP has always been a bit of a challenge, but fortunately, it

has undergone many changes for the better. Do you remember the times before PHP

7, when almost every error meant a fatal error, instantly terminating the

application? In practice, this meant that any error could completely stop the

application without giving the programmer a chance to catch it and respond

appropriately. Tools like Tracy used magical tricks to visualize and log such

errors. Fortunately, with the arrival of PHP 7, this changed. Errors now throw

exceptions like Error, TypeError, and ParseError, which can be easily caught and

handled.

However, even in modern PHP, there is a weak spot where it behaves the same

as in its fifth version. I am talking about errors during compilation. These

cannot be caught and immediately lead to the termination of the application.

They are E_COMPILE_ERROR level errors. PHP generates around two hundred of them.

It creates a paradoxical situation where loading a file with a syntax error in

PHP, such as a missing semicolon, throws a catchable ParseError exception.

However, if the code is syntactically correct but contains a

compilation-detectable error (like two methods with the same name), it results

in a fatal error that cannot be caught.

try {

require 'path_to_file.php';

} catch (ParseError $e) {

echo "Syntactic error in PHP file";

}
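The catchable half of this paradox can be tried without creating a broken file. In this sketch, eval() stands in for require, which is my substitution to keep the example self-contained. Both compile PHP source at runtime and report syntax errors as a ParseError.

```php
<?php
declare(strict_types=1);

$caught = null;

try {
    // The missing operand after "+" is a syntax error
    eval('$x = 1 + ;');
} catch (ParseError $e) {
    $caught = $e->getMessage();
}

echo $caught === null
    ? "No error\n"
    : "Caught ParseError: $caught\n";
```

A compile error such as two methods with the same name cannot be demonstrated the same way, because it would terminate the script immediately.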

Unfortunately, we cannot internally verify compilation errors in PHP. There

was a function php_check_syntax(), which, despite its name,

detected compilation errors as well. It was introduced in PHP 5.0.0 but quickly

removed in version 5.0.4 and has never been replaced since. To verify the

correctness of the code, we must rely on a command-line linter:

php -l file.php

From the PHP environment, you can verify code stored in the variable

$code like this:

$code = '... PHP code to verify ...';

$process = proc_open(

PHP_BINARY . ' -l',

[['pipe', 'r'], ['pipe', 'w'], ['pipe', 'w']],

$pipes,

null,

null,

['bypass_shell' => true],

);

fwrite($pipes[0], $code);

fclose($pipes[0]);

$error = stream_get_contents($pipes[1]);

fclose($pipes[1]);

fclose($pipes[2]);

if (proc_close($process) !== 0) {

echo 'Error in PHP file: ' . $error;

}
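The same idea can be wrapped in a reusable function. This is a sketch under a few assumptions: the name lintPhpCode() is made up, PHP_BINARY must point to a usable PHP executable, and the array form of the command requires PHP 7.4 or newer.

```php
<?php
declare(strict_types=1);

/**
 * Lints PHP source code with `php -l` (hypothetical helper).
 * Returns null when the code is fine, otherwise the linter output.
 */
function lintPhpCode(string $code): ?string
{
    $process = proc_open(
        [PHP_BINARY, '-l'], // array form runs the binary without a shell
        [['pipe', 'r'], ['pipe', 'w'], ['pipe', 'w']],
        $pipes,
    );
    if (!is_resource($process)) {
        throw new RuntimeException('Unable to start the PHP linter');
    }

    // Without a file name, the linter reads the code from standard input
    fwrite($pipes[0], $code);
    fclose($pipes[0]);

    // Depending on configuration, messages may arrive on stdout or stderr
    $output = stream_get_contents($pipes[1]) . stream_get_contents($pipes[2]);
    fclose($pipes[1]);
    fclose($pipes[2]);

    return proc_close($process) === 0 ? null : trim($output);
}

var_dump(lintPhpCode('<?php echo 1;'));    // valid code
var_dump(lintPhpCode('<?php echo 1 +;'));  // syntax error
```

Passing a class with two identically named methods should be reported as well, since that is exactly the kind of compilation error the linter exists to catch.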

However, the overhead of running an external PHP process to verify one file

is quite large. But good news comes with PHP version 8.3, which will allow

verifying multiple files at once:

php -l file1.php file2.php file3.php

PHP users have been waiting for the ?? operator for an

incredibly long time, perhaps ten years. Today, I regret that it didn't take

longer.

- Wait, what? Ten years? You're exaggerating, aren't you?

- Really. Discussion started in 2004 under the name “ifsetor”. And it

didn't make it into PHP until December 2015 in version 7.0. So almost

12 years.

- Aha! Oh, man.

It's a pity we didn't wait longer. Because it doesn't fit into the

current PHP.

PHP has made an incredible shift towards strictness since 7.0. Key

moments:

The ?? operator simplified the annoying:

isset($somethingI[$haveToWriteTwice]) ? $somethingI[$haveToWriteTwice] : 'default value'

to just:

$write[$once] ?? 'default value'

But it did this at a time when the need to use isset() has

greatly diminished. Today, we more often assume that the data we access exists.

And if it doesn't exist, we damn well want to know about it.

But the ?? operator has the side effect of being able to detect

null. Which is also the most common reason to use it:

$len = $this->length ?? 'default value'

Unfortunately, it also hides errors. It hides typos:

// always returns 'default value', do you know why?

$len = $this->lenght ?? 'default value'
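The following self-contained sketch shows the typo problem in action. The class Text and its property are invented for the demonstration.

```php
<?php
declare(strict_types=1);

final class Text
{
    public ?int $length = 5;
}

$text = new Text;

// Correct property name: the stored value wins.
$ok = $text->length ?? 'default value';

// Typo in the property name: ?? silently treats the undefined
// property as null, so no warning is raised.
$typo = $text->lenght ?? 'default value';

var_dump($ok);   // int(5)
var_dump($typo); // string(13) "default value"
```

Without the ?? operator, reading $text->lenght would at least trigger an "Undefined property" warning.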

In short, we got ?? at the exact moment when, on the contrary,

we would most need to shorten this:

$somethingI[$haveToWriteTwice] === null

? 'default value'

: $somethingI[$haveToWriteTwice]

It would be wonderful if PHP 9.0 had the courage to modify the behavior of

the ?? operator to be a bit more strict. Make the “isset

operator” really a “null coalesce operator”, as it is officially called by

the way.

PHPStan with checkDynamicProperties: true helps you detect typos suppressed by the ?? operator.

You've probably encountered the “tabs vs. spaces” debate for indentation

before. This argument has been around for ages, and both sides present their

reasons:

Tabs:

- Indenting is their purpose

- Smaller files, as indentation takes up one character

- You can set your own indentation width (more on this later)

Spaces:

- Code will look the same everywhere, and consistency is key

- Avoid potential issues in environments sensitive to whitespace

But what if it's about more than personal preference? ChaseMoskal recently

posted a thought-provoking entry on Reddit titled Nobody

talks about the real reason to use tabs instead of spaces that might open

your eyes.

The Main Reason to Use Tabs

Chase describes his experience with implementing spaces at his workplace and

the negative impacts it had on colleagues with visual impairments.

One of them was accustomed to using a tab width of 1 to avoid large

indentations when using large fonts. Another uses a tab width of 8 because it

suits him best on an ultra-wide monitor. For both, however, code with spaces

poses a serious problem, requiring them to convert spaces to tabs before reading

and back to spaces before committing.
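To give an idea of what that round trip involves, here is a rough sketch of the two conversions. The function names are made up, the code handles only leading indentation in whole multiples of the chosen width, and it is not a tool recommended by the original post.

```php
<?php
declare(strict_types=1);

/** Converts leading groups of $width spaces to tabs (hypothetical helper). */
function spacesToTabs(string $code, int $width = 4): string
{
    return preg_replace_callback(
        '~^(?: {' . $width . '})+~m',
        fn(array $m): string => str_repeat("\t", intdiv(strlen($m[0]), $width)),
        $code,
    );
}

/** Converts leading tabs back to $width spaces each (hypothetical helper). */
function tabsToSpaces(string $code, int $width = 4): string
{
    return preg_replace_callback(
        '~^\t+~m',
        fn(array $m): string => str_repeat(' ', strlen($m[0]) * $width),
        $code,
    );
}

$spaced = "if (\$x) {\n    a();\n        b();\n}";
$tabbed = spacesToTabs($spaced);

var_dump($tabbed === "if (\$x) {\n\ta();\n\t\tb();\n}");
var_dump(tabsToSpaces($tabbed) === $spaced);
```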

For blind programmers who use Braille displays, each space represents one

Braille cell. Therefore, if the default indentation is 4 spaces, a third-level

indentation wastes 12 precious Braille cells even before the start of the code.

On a 40-cell display, which is most commonly used with laptops, this is more

than a quarter of the available cells, wasted without conveying any

information.

Adjusting the width of indentation may seem trivial to us, but for some

programmers, it is absolutely essential. And that’s something we simply

cannot ignore.

By using tabs in our projects, we give them the opportunity for this

adjustment.

Accessibility First, Then Personal Preference

Sure, not everyone can be persuaded to choose one side over the other when it

comes to preferences. Everyone has their own. And we should appreciate the

option to choose.

However, we must ensure that we consider everyone. We should respect

differences and use accessible means. Like the tab character, for instance.

I think Chase put it perfectly when he mentioned in his post that

“…there is no counterargument that comes close to outweighing the

accessibility needs of our colleagues.”

Accessible First

Just as the “mobile first” methodology has become popular in web design,

where we ensure that everyone, regardless of device, has a great user experience

with your product – we should strive for an “accessible first”

environment by ensuring that everyone has the same opportunity to work with

code, whether in employment or on an open-source project.

If tabs become the default choice for indentation, we remove one barrier.

Collaboration will then be pleasant for everyone, regardless of their abilities.

If everyone has the same opportunities, we can fully utilize our collective

potential ❤️

This article is based on Default

to tabs instead of spaces for an ‘accessible first’ environment. I read

a similarly convincing post in 2008 and changed from spaces to tabs in all my

projects that very day. It left a trace

in Git, but the article itself has disappeared into the annals of

history.

As of version 3.1.6, the Texy library adds support for Latte 3

in the form of the {texy} tag. What can it do and how do you

deploy it?

The {texy} tag represents an easy way to write directly in Texy

syntax in Latte templates:

{texy}

You Already Know the Syntax

----------

No kidding, you know Latte syntax already. **It is the same as PHP syntax.**

{/texy}

Simply install the extension in Latte and pass it a Texy object configured as

needed:

$texy = new Texy\Texy;

$latte = new Latte\Engine;

$latte->addExtension(new Texy\Bridges\Latte\TexyExtension($texy));

If there is static text between the {texy}...{/texy} tags, it is

translated using Texy during the template compilation and the result is stored

in the compiled template. If the content is dynamic (i.e., there are Latte tags inside), the

processing using Texy is performed each time the template is rendered.

If it is desirable to disable Latte tags inside, it can be done

like this:

{texy syntax: off} ... {/texy}

In addition to the Texy object, a custom function can also be passed to the

extension, thus allowing parameters to be passed from the template. For

instance, we might want to pass the parameters locale and

heading:

$processor = function (string $text, int $heading = 1, string $locale = 'cs'): string {

$texy = new Texy\Texy;

$texy->headingModule->top = $heading;

$texy->typographyModule->locale = $locale;

return $texy->process($text);

};

$latte = new Latte\Engine;

$latte->addExtension(new Texy\Bridges\Latte\TexyExtension($processor));

Parameters in the template are passed like this:

{texy locale: en, heading: 3}

...

{/texy}

If you want to format text stored in a variable using Texy, you can use a

filter:

{$description|texy}